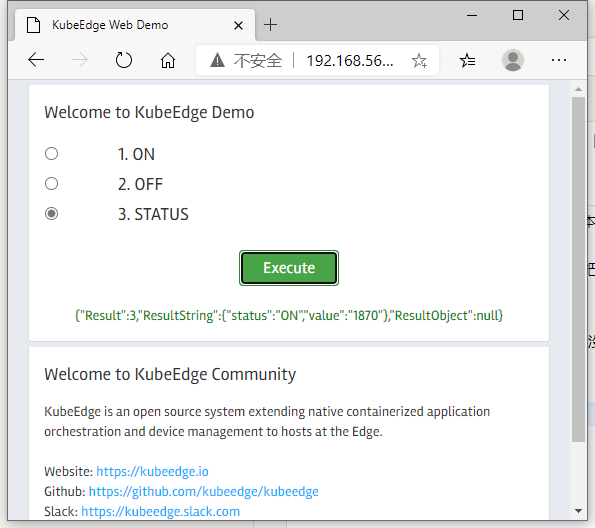

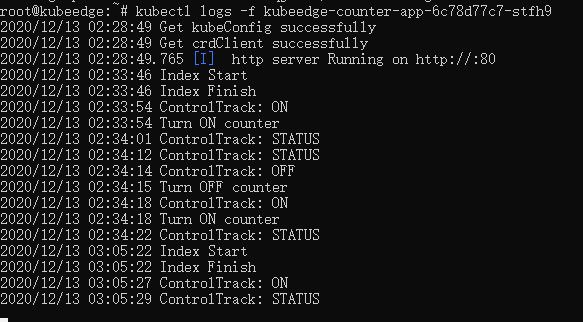

kubeedge-counter-demo (finished)

2020-12-13 · Notes, Experiments

Troubleshooting went badly. The conclusion was that the Device CRD API versions are incompatible: the example uses `v1alpha1`, while the environment (KubeEdge v1.5.0) uses `v1alpha2`. Switching the example to `v1alpha2` broke its build, and switching the environment to `v1alpha1` kept the environment from running. With KubeEdge v1.3.1 there were no problems at all, and everything worked on the first try.

[TOC]

# Trying KubeEdge v1.3.1

## Roll back KubeEdge and the examples

```
# Roll KubeEdge back to v1.3.1
cd $GOPATH/src/github.com/kubeedge/kubeedge
rm -rf *
git reset --hard 5bfca35

# Roll the examples back to the October 22 commit
cd $GOPATH/src/github.com/kubeedge/examples
rm -rf *
git reset --hard cc38a1a
```

## Build

```
cd $GOPATH/src/github.com/kubeedge/kubeedge
make all WHAT=cloudcore
make all WHAT=edgecore
make all WHAT=keadm
cp _output/local/bin/keadm /usr/bin/            # Cloud and Edge
cp _output/local/bin/cloudcore /usr/local/bin/  # Cloud
cp _output/local/bin/edgecore /usr/bin/         # Edge
```

## Clean up the old KubeEdge installation

1. Clean up with keadm:
   ```
   keadm reset
   ```
2. Delete the kind cluster:
   ```
   kind delete cluster
   ```
3. Remove images you no longer need:
   ```
   # List existing images
   docker images
   # Delete the images one by one
   docker image rm <image id>
   ```
4. Remove the database used by edgecore:
   ```
   rm /var/lib/kubeedge/edgecore.db
   ```
5. Reset the kubeedge directories:
   ```
   rm -rf /etc/kubeedge
   mkdir /etc/kubeedge
   mkdir /etc/kubeedge/config
   ```
6. Delete the kubeedge namespace:
   ```
   kubectl delete namespace kubeedge
   ```

## Deploy KubeEdge

### K8s master node (kind)

```
kind delete cluster
rm /root/kind.yaml
vim /root/kind.yaml
```

Enter the following. 192.168.56.103 is the Cloud's IP as seen from the Edge, and ports 80 and 8080 are extra ports to expose:

```
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  apiServerAddress: "192.168.56.103"
  apiServerPort: 6443
nodes:
- role: control-plane
  image: kindest/node:v1.19.3
  extraPortMappings:
  - containerPort: 80
    hostPort: 80
    protocol: TCP
  - containerPort: 8080
    hostPort: 8080
    protocol: TCP
```

Create the kind cluster:

```
# Create the k8s master node
kind create cluster --config=/root/kind.yaml
# Copy the k8s server-side kubeconfig to the Edge again
scp -r /root/.kube/config root@192.168.56.101:/root/.kube/config
```

### Install basic tools in the k8s master

```
# Enter the k8s control-plane container
docker exec -it kind-control-plane /bin/bash
```

#### Check the OS version

```
cat /etc/issue
```

> ```
> root@kind-control-plane:/# cat /etc/issue
> Ubuntu Groovy Gorilla (development branch) \n \l
> ```
>
> On the [Tsinghua Open Source Mirror Ubuntu help page](https://mirror.tuna.tsinghua.edu.cn/help/ubuntu/), select version 20.10.

#### Switch the package sources and install net-tools and vim

```
mv /etc/apt/sources.list /etc/apt/sources.list.bak
echo "deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ groovy main restricted universe multiverse" >/etc/apt/sources.list
echo "deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ groovy-updates main restricted universe multiverse" >>/etc/apt/sources.list
echo "deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ groovy-backports main restricted universe multiverse" >>/etc/apt/sources.list
echo "deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ groovy-security main restricted universe multiverse" >>/etc/apt/sources.list
apt-get update
apt-get install -y net-tools vim
```

### Open HTTP port 8080

```
# Enter the kind-control-plane container
docker exec -it kind-control-plane /bin/bash
# Edit the api-server manifest
cd /etc/kubernetes/manifests
vim kube-apiserver.yaml
# 1. Change the `0` in `- --insecure-port=0` to `8080`.
# 2. Add `- --insecure-bind-address=0.0.0.0` to the same argument list.
# Restart
exit
docker restart kind-control-plane
```

### Deploy cloudcore

#### Register the device CRDs and the reliable-sync CRDs

```
cd $GOPATH/src/github.com/kubeedge/kubeedge/build/crds/devices
kubectl apply -f ./devices_v1alpha1_device.yaml
kubectl apply -f ./devices_v1alpha1_devicemodel.yaml

cd $GOPATH/src/github.com/kubeedge/kubeedge/build/crds/reliablesyncs
kubectl apply -f objectsync_v1alpha1.yaml
kubectl apply -f cluster_objectsync_v1alpha1.yaml
```

#### ~~Self-signed certificates~~

> **Note: v1.3.1 does not need certgen.sh to sign certificates manually. If you have already signed them, run the following:**
>
> ```
> rm -rf /etc/kubeedge/ca
> rm -rf /etc/kubeedge/certs
> kubectl delete namespace kubeedge
> ```

```
vim /etc/kubeedge/certgen.sh
```

Press `esc`, type `:set paste`, press `i`, and paste the following:

```
#!/usr/bin/env bash

set -o errexit

readonly caPath=${CA_PATH:-/etc/kubeedge/ca}
readonly caSubject=${CA_SUBJECT:-/C=CN/ST=Zhejiang/L=Hangzhou/O=KubeEdge/CN=kubeedge.io}
readonly certPath=${CERT_PATH:-/etc/kubeedge/certs}
readonly subject=${SUBJECT:-/C=CN/ST=Zhejiang/L=Hangzhou/O=KubeEdge/CN=kubeedge.io}

genCA() {
    openssl genrsa -des3 -out ${caPath}/rootCA.key -passout pass:kubeedge.io 4096
    openssl req -x509 -new -nodes -key ${caPath}/rootCA.key -sha256 -days 3650 \
    -subj ${subject} -passin pass:kubeedge.io -out ${caPath}/rootCA.crt
}

ensureCA() {
    if [ ! -e ${caPath}/rootCA.key ] || [ ! -e ${caPath}/rootCA.crt ]; then
        genCA
    fi
}

ensureFolder() {
    if [ ! -d ${caPath} ]; then
        mkdir -p ${caPath}
    fi
    if [ ! -d ${certPath} ]; then
        mkdir -p ${certPath}
    fi
}

genCsr() {
    local name=$1
    openssl genrsa -out ${certPath}/${name}.key 2048
    openssl req -new -key ${certPath}/${name}.key -subj ${subject} -out ${certPath}/${name}.csr
}

genCert() {
    local name=$1
    openssl x509 -req -in ${certPath}/${name}.csr -CA ${caPath}/rootCA.crt -CAkey ${caPath}/rootCA.key \
    -CAcreateserial -passin pass:kubeedge.io -out ${certPath}/${name}.crt -days 365 -sha256
}

genCertAndKey() {
    ensureFolder
    ensureCA
    local name=$1
    genCsr $name
    genCert $name
}

gen() {
    ensureFolder
    ensureCA
    local name=$1
    local ipList=$2
    genCsr $name
    #local name=$1
    #openssl x509 -req -in ${certPath}/${name}.csr -CA ${caPath}/rootCA.crt -CAkey ${caPath}/rootCA.key \
    #-CAcreateserial -passin pass:kubeedge.io -out ${certPath}/${name}.crt -days 365 -sha256
    # Build "subjectAltName = IP.1:127.0.0.1,IP.2:<ip>,..." from the given IP list
    SUBJECTALTNAME="subjectAltName = IP.1:127.0.0.1"
    index=1
    for ip in ${ipList}; do
        SUBJECTALTNAME="${SUBJECTALTNAME},"
        index=$(($index+1))
        SUBJECTALTNAME="${SUBJECTALTNAME}IP.${index}:${ip}"
    done
    echo $SUBJECTALTNAME > /tmp/server-extfile.cnf
    # verify
    openssl x509 -req -in ${certPath}/${name}.csr -CA ${caPath}/rootCA.crt -CAkey ${caPath}/rootCA.key \
    -CAcreateserial -passin pass:kubeedge.io -out ${certPath}/${name}.crt -days 5000 -sha256 \
    -extfile /tmp/server-extfile.cnf
    #verify
    openssl x509 -in ${certPath}/${name}.crt -text -noout
}

stream() {
    ensureFolder

    readonly streamsubject=${SUBJECT:-/C=CN/ST=Zhejiang/L=Hangzhou/O=KubeEdge}
    readonly STREAM_KEY_FILE=${certPath}/stream.key
    readonly STREAM_CSR_FILE=${certPath}/stream.csr
    readonly STREAM_CRT_FILE=${certPath}/stream.crt
    readonly K8SCA_FILE=/etc/kubernetes/pki/ca.crt
    readonly K8SCA_KEY_FILE=/etc/kubernetes/pki/ca.key

    # Quoted so that several space-separated IPs do not break the test
    if [ -z "${CLOUDCOREIPS}" ]; then
        echo "You must set CLOUDCOREIPS Env,The environment variable is set to specify the IP addresses of all cloudcore"
        echo "If there are more than one IP need to be separated with space."
        exit 1
    fi

    index=1
    SUBJECTALTNAME="subjectAltName = IP.1:127.0.0.1"
    for ip in ${CLOUDCOREIPS}; do
        SUBJECTALTNAME="${SUBJECTALTNAME},"
        index=$(($index+1))
        SUBJECTALTNAME="${SUBJECTALTNAME}IP.${index}:${ip}"
    done

    cp /etc/kubernetes/pki/ca.crt ${caPath}/streamCA.crt
    echo $SUBJECTALTNAME > /tmp/server-extfile.cnf

    openssl genrsa -out ${STREAM_KEY_FILE} 2048
    openssl req -new -key ${STREAM_KEY_FILE} -subj ${streamsubject} -out ${STREAM_CSR_FILE}

    # verify
    openssl req -in ${STREAM_CSR_FILE} -noout -text
    openssl x509 -req -in ${STREAM_CSR_FILE} -CA ${K8SCA_FILE} -CAkey ${K8SCA_KEY_FILE} -CAcreateserial -out ${STREAM_CRT_FILE} -days 5000 -sha256 -extfile /tmp/server-extfile.cnf
    #verify
    openssl x509 -in ${STREAM_CRT_FILE} -text -noout
}

buildSecret() {
    local name="edge"
    genCertAndKey ${name} > /dev/null 2>&1
    cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: cloudcore
  namespace: kubeedge
  labels:
    k8s-app: kubeedge
    kubeedge: cloudcore
stringData:
  rootCA.crt: |
$(pr -T -o 4 ${caPath}/rootCA.crt)
  edge.crt: |
$(pr -T -o 4 ${certPath}/${name}.crt)
  edge.key: |
$(pr -T -o 4 ${certPath}/${name}.key)

EOF
}

$1 $2 $3
```

Save and quit.

```
# Set permissions
chmod 755 /etc/kubeedge/certgen.sh
# Certificates for the Edge
/etc/kubeedge/certgen.sh buildSecret
scp -r /etc/kubeedge/certs root@192.168.56.101:/etc/kubeedge/
# Self-sign the stream certificate
mkdir -p /etc/kubernetes/pki
docker exec kind-control-plane cat /etc/kubernetes/pki/ca.crt > /etc/kubernetes/pki/ca.crt
docker exec kind-control-plane cat /etc/kubernetes/pki/ca.key > /etc/kubernetes/pki/ca.key
export CLOUDCOREIPS="192.168.56.103"
/etc/kubeedge/certgen.sh stream
# Self-sign the server certificate
/etc/kubeedge/certgen.sh gen server 192.168.56.103
```

#### Configure cloudcore

```
cloudcore --defaultconfig > /etc/kubeedge/config/cloudcore.yaml
vim /etc/kubeedge/config/cloudcore.yaml
```

Change `advertiseAddress` under `cloudHub` to the Cloud's external IP.

#### Start cloudcore

```
cloudcore
```

Get the token:

```
# This one-liner is equivalent to running keadm gettoken
kubectl get secret -n kubeedge tokensecret -o=jsonpath='{.data.tokendata}' | base64 -d && echo
```
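The `| base64 -d` at the end of that command is only there because Kubernetes stores Secret `data` values base64-encoded. If you want to do the same decode from a program, here is a minimal Go sketch. It assumes you already have the base64 text printed by the `jsonpath` query, and `decodeTokenData` is a hypothetical helper name, not something KubeEdge provides.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"os"
	"strings"
)

// decodeTokenData mirrors the `| base64 -d` step of the kubectl pipeline:
// it takes the base64 text printed by -o=jsonpath='{.data.tokendata}'
// and returns the plain token string.
// Hypothetical helper for illustration; KubeEdge does not ship it.
func decodeTokenData(encoded string) (string, error) {
	raw, err := base64.StdEncoding.DecodeString(strings.TrimSpace(encoded))
	if err != nil {
		return "", fmt.Errorf("tokendata is not valid base64: %w", err)
	}
	return string(raw), nil
}

func main() {
	if len(os.Args) < 2 {
		fmt.Fprintln(os.Stderr, "usage: decodetoken <base64 tokendata>")
		os.Exit(1)
	}
	token, err := decodeTokenData(os.Args[1])
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println(token)
}
```

Passing it the output of `kubectl get secret -n kubeedge tokensecret -o=jsonpath='{.data.tokendata}'` as its argument should print the same token as the shell pipeline.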
### Deploy edgecore

The following runs on the Edge:

```
edgecore --defaultconfig > /etc/kubeedge/config/edgecore.yaml
vim /etc/kubeedge/config/edgecore.yaml
```

1. Set `httpServer`, the `quic` server and the `websocket` server to the Cloud's external IP;
2. Paste the token obtained above into `token`;
3. Set NodeIP further down to the Edge's external IP;
4. Set the edge node's name in `hostnameOverride` (the default is `kubeedge`; I left it unchanged).

```
edgecore
```

## Deploy the counter example

### Deploy the CRD files

```
cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds
vim kubeedge-counter-instance.yaml
```

1. Change `raspberrypi` to the edge node's name. It must match the `hostnameOverride` field in `/etc/kubeedge/config/edgecore.yaml` set above.
2. If the first line (the `apiVersion` line) ends with `v1alpha2`, change it to `v1alpha1`.

```
cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds
vim kubeedge-counter-model.yaml
```

Likewise, change `v1alpha2` at the end of the first line to `v1alpha1`.

```
kubectl create -f kubeedge-counter-model.yaml
kubectl create -f kubeedge-counter-instance.yaml
```

### Deploy the web app

#### 1. Modify the code

```
cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/web-controller-app/utils
vim kubeclient.go
```

In `var KubeMaster = "http://127.0.0.1:8080"`, change 127.0.0.1 to 192.168.56.103, then save and quit.

#### 2. Build the image

```
cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/web-controller-app
make
docker build . -t pro1515151515/kubeedge-counter-app:v1.1.0
docker push pro1515151515/kubeedge-counter-app:v1.1.0
```

#### 3. Delete the old deployment

```
kubectl delete deployment kubeedge-counter-app
```

An `xxx not found` error is normal here; it just means there was no previous deployment.

#### 4. Update the CRD file

```
cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds
vim kubeedge-web-controller-app.yaml
```

> Change `image: kubeedge/kubeedge-counter-app:v1.1.0` to
>
> ```
> image: pro1515151515/kubeedge-counter-app:v1.1.0
> ```

#### 5. Deploy

```
cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds
kubectl create -f kubeedge-web-controller-app.yaml
```

### Deploy the counter device

#### 1. Modify the Makefile

```
cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/counter-mapper
vim Makefile
```

Change `GOARCH=arm64` to `GOARCH=amd64` so the binary matches the Edge machine's architecture (amd64 here, rather than a Raspberry Pi's arm64).
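Whether the counter mapper needs `GOARCH=amd64` or `GOARCH=arm64` depends on the Edge's CPU. The small Go sketch below, which assumes you can build and run a Go program on the Edge, prints the setting to put in the Makefile. `runtime.GOARCH` reports the architecture the program was compiled for, so build it natively on the Edge with no `GOARCH` override for the answer to mean anything. The helper name `makefileGOARCH` is hypothetical.

```go
package main

import (
	"fmt"
	"runtime"
)

// makefileGOARCH returns the GOARCH line the counter-mapper Makefile
// should use on a machine whose Go architecture is arch.
// Only amd64 and arm64 are handled, the two values discussed in this post.
func makefileGOARCH(arch string) (string, bool) {
	switch arch {
	case "amd64", "arm64":
		return "GOARCH=" + arch, true
	default:
		return "", false
	}
}

func main() {
	if setting, ok := makefileGOARCH(runtime.GOARCH); ok {
		fmt.Println(setting)
	} else {
		fmt.Printf("unhandled architecture %q; check the Makefile manually\n", runtime.GOARCH)
	}
}
```

On the VirtualBox Edge used here, this should print `GOARCH=amd64`. `uname -m` gives the same information from the shell, though it uses kernel names such as `x86_64` and `aarch64`.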
#### 2. Build the image

```
cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/counter-mapper
make
docker build . -t pro1515151515/kubeedge-pi-counter:v1.1.0
docker push pro1515151515/kubeedge-pi-counter:v1.1.0
```

If the last command times out, copy the image to the Edge manually as described in step 3.

#### 3. Copy the image to the Edge

```
docker save -o kubeedge-pi-counter.tar pro1515151515/kubeedge-pi-counter:v1.1.0
scp kubeedge-pi-counter.tar root@192.168.56.101:/root
```

On the Edge, run:

```
docker load -i /root/kubeedge-pi-counter.tar
```

#### 4. Update the CRD file

```
cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds
vim kubeedge-pi-counter-app.yaml
```

> Change `image: kubeedge/kubeedge-pi-counter:v1.0.0` to
>
> ```
> image: pro1515151515/kubeedge-pi-counter:v1.1.0
> ```

#### 5. Deploy

```
cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds
kubectl create -f kubeedge-pi-counter-app.yaml
```

## Check the running state

### Node status

```
kubectl get nodes
```

> ```
> root@kubeedge:~# kubectl get nodes
> NAME                 STATUS   ROLES        AGE   VERSION
> kind-control-plane   Ready    master       13h   v1.19.3
> kubeedge             Ready    agent,edge   12h   v1.17.1-kubeedge-v1.3.1
> ```

### Deployment status

```
kubectl get deployments
```

> ```
> root@kubeedge:~# kubectl get deployments
> NAME                   READY   UP-TO-DATE   AVAILABLE   AGE
> kubeedge-counter-app   1/1     1            1           4m55s
> kubeedge-pi-counter    1/1     1            1           107s
> ```

### Pod status

```
kubectl get pods
```

> ```
> root@kubeedge:~# kubectl get pods
> NAME                                   READY   STATUS    RESTARTS   AGE
> kubeedge-counter-app-6c78d77c7-stfh9   1/1     Running   0          5m29s
> kubeedge-pi-counter-86f84fddff-6khqj   1/1     Running   0          2m21s
> ```

### Logs of the counter-app pod

```
kubectl logs -f kubeedge-counter-app-6c78d77c7-stfh9
```

Open the web page at 192.168.56.103 and click the buttons: everything works now.

> ```
> root@kubeedge:~# kubectl logs -f kubeedge-counter-app-6c78d77c7-stfh9
> 2020/12/13 02:28:49 Get kubeConfig successfully
> 2020/12/13 02:28:49 Get crdClient successfully
> 2020/12/13 02:28:49.765 [I] http server Running on http://:80
> 2020/12/13 02:33:46 Index Start
> 2020/12/13 02:33:46 Index Finish
> 2020/12/13 02:33:54 ControlTrack: ON
> 2020/12/13 02:33:54 Turn ON counter
> 2020/12/13 02:34:01 ControlTrack: STATUS
> 2020/12/13 02:34:12 ControlTrack: STATUS
> 2020/12/13 02:34:14 ControlTrack: OFF
> 2020/12/13 02:34:15 Turn OFF counter
> 2020/12/13 02:34:22 ControlTrack: STATUS
> ```

Tags: kubeedge

This work is licensed under the Creative Commons Attribution-ShareAlike 4.0 International License.

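What does the data flow in the counter demo look like? Could you write a dedicated post about it?

So whenever edgecore sees the device change, does it send data to $hw/events/device/counter/twin/update?

edgecore normally only listens to MQTT and does not publish on its own, unless an app remotely instructs it to.

web-controller-app changes the device status through crdClient, and crdClient remotely gets edgecore to publish to MQTT (see the `UpdateDeviceTwinWithDesiredTrack` function in `trackController.go`).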
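To make the first half of that flow concrete: the web app does not talk to MQTT itself. It sends a merge patch that sets the desired value of the device's `status` twin, and cloudcore and edgecore take it from there. The minimal Go sketch below builds such a patch body. It assumes simplified, hypothetical struct types that only imitate the layout of the Device CRD's `status.twins` (they are not the real KubeEdge types), and the real `UpdateDeviceTwinWithDesiredTrack` may fill in more fields, such as `reported` or a timestamp.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Hypothetical, trimmed-down mirrors of the Device CRD status layout:
// status.twins[].propertyName / desired.value / desired.metadata.
type TwinProperty struct {
	Value    string            `json:"value"`
	Metadata map[string]string `json:"metadata,omitempty"`
}

type Twin struct {
	PropertyName string       `json:"propertyName"`
	Desired      TwinProperty `json:"desired"`
}

type DeviceStatus struct {
	Twins []Twin `json:"twins"`
}

type devicePatch struct {
	Status DeviceStatus `json:"status"`
}

// buildDesiredPatch returns a JSON merge-patch body that sets the desired
// value of the "status" twin, e.g. "ON" or "OFF" for the counter.
// Note: a merge patch replaces the whole twins list.
func buildDesiredPatch(value string) ([]byte, error) {
	patch := devicePatch{Status: DeviceStatus{Twins: []Twin{{
		PropertyName: "status",
		Desired: TwinProperty{
			Value:    value,
			Metadata: map[string]string{"type": "string"},
		},
	}}}}
	return json.Marshal(patch)
}

func main() {
	body, err := buildDesiredPatch("ON")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(body))
}
```

Running it prints `{"status":{"twins":[{"propertyName":"status","desired":{"value":"ON","metadata":{"type":"string"}}}]}}`. A body of this shape is what `kubectl patch device counter --type merge -p '...'` would send.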

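My current problem is that I can see the device status change, but the counter-mapper on the edge prints no logs. It looks as if it never receives the MQTT data, so I want to find out where the data gets published to MQTT.

The `UpdateDeviceTwinWithDesiredTrack` function updates the device status; that part is clear.

So which function performs the step "crdClient remotely gets edgecore to publish to MQTT"?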

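Just to confirm: are you looking at the output of counter-app or of counter-mapper?

"Updating the device status" is implemented precisely by "crdClient remotely gets edgecore to publish to MQTT". For the details you have to read the cloudcore and edgecore source code; the path is Controller→CloudHub→EdgeHub→EventBus→mqtt.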

OK, thanks a lot!
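The path Controller→CloudHub→EdgeHub→EventBus→mqtt from the reply above can be pictured as a relay in which each module hands the message to the next. The Go sketch below is only a toy model of that idea: each stage is a goroutine connected by channels, and it stamps its name on the message. In real KubeEdge the modules exchange messages through the beehive framework, and CloudHub and EdgeHub talk over a websocket or QUIC connection; none of that is modeled here, and `relay`/`stage` are hypothetical names.

```go
package main

import (
	"fmt"
	"strings"
)

// message is a toy stand-in for a KubeEdge message; trace records
// which modules it has passed through.
type message struct {
	payload string
	trace   []string
}

// stage starts a goroutine that appends name to each message's trace
// and forwards it on the returned channel.
func stage(name string, in <-chan message) <-chan message {
	out := make(chan message)
	go func() {
		defer close(out)
		for m := range in {
			m.trace = append(m.trace, name)
			out <- m
		}
	}()
	return out
}

// relay pushes one message through the chain of stages and returns the
// route it took, joined with arrows, followed by the payload.
func relay(payload string, names ...string) string {
	src := make(chan message, 1)
	var ch <-chan message = src
	for _, n := range names {
		ch = stage(n, ch)
	}
	src <- message{payload: payload}
	close(src) // lets every stage goroutine exit after forwarding
	m := <-ch
	return strings.Join(m.trace, "→") + ": " + m.payload
}

func main() {
	fmt.Println(relay("desired status=ON",
		"Controller", "CloudHub", "EdgeHub", "EventBus", "mqtt"))
}
```

This prints `Controller→CloudHub→EdgeHub→EventBus→mqtt: desired status=ON`. The point is only the ordering: a problem at any hop (for example, EdgeHub not connected to CloudHub) stops the message before it ever reaches MQTT.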

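I have a question for you.

The web app only patches the device status.

And as far as I can see, the edge-side code only subscribes to MQTT topics. So who publishes messages to those topics? And if edgecore does, how does it know the topic names?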

counter-mapper publishes to MQTT at line 29 of `device.go`, `counter.handle(data)`; this `counter.handle` is the `publishToMqtt` function in `main.go`.
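The wiring described here, where the counter's `handle` is set to the MQTT publish function so that `counter.handle(data)` ends up publishing, is a plain callback. The minimal Go sketch below shows that pattern. It assumes hypothetical `Counter` and `fakeBroker` types and an in-memory fake in place of a real MQTT client; the real mapper uses an MQTT client library with its own topics and payload format.

```go
package main

import "fmt"

// publishFunc is the callback type: it receives the counter's latest value.
type publishFunc func(data int)

// Counter is a hypothetical stand-in for the mapper's counter device.
// It knows nothing about MQTT; it only calls whatever handle it was given.
type Counter struct {
	value  int
	handle publishFunc
}

// tick advances the counter and passes the new value to the callback,
// like the counter.handle(data) call in device.go.
func (c *Counter) tick() {
	c.value++
	if c.handle != nil {
		c.handle(c.value)
	}
}

// fakeBroker records what would have been published to MQTT.
type fakeBroker struct {
	messages []string
}

// publishToMqtt plays the role of main.go's function of the same name,
// but appends to the fake broker instead of using a real MQTT client.
func (b *fakeBroker) publishToMqtt(data int) {
	b.messages = append(b.messages, fmt.Sprintf("count=%d", data))
}

func main() {
	broker := &fakeBroker{}
	counter := &Counter{handle: broker.publishToMqtt} // method value used as the callback
	counter.tick()
	counter.tick()
	fmt.Println(broker.messages)
}
```

This prints `[count=1 count=2]`. Keeping the device logic unaware of MQTT is what makes this kind of fake possible: if the mapper logs nothing, checking whether `handle` is ever called separates "the counter never ran" from "publishing failed".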

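edgecore can also publish to MQTT to communicate with counter-mapper. edgecore does not know any topic names; it simply subscribes to topics by pattern, such as $hw/events/device/<device name>/state/update and $hw/events/device/<device name>/twin/update. The CRD files happen to define a device named `counter`, so edgecore ends up subscribed to the topic $hw/events/device/counter/twin/update.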
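Subscribing "by pattern" means subscribing to MQTT topic filters with wildcards instead of concrete topic names: `+` matches exactly one topic level, and `#` (only as the last level) matches all remaining levels. The minimal Go sketch below implements MQTT filter matching. The filter list is an assumption based on the subscriptions KubeEdge's edge EventBus makes (such as `$hw/events/device/+/twin/+`) and may differ between versions.

```go
package main

import (
	"fmt"
	"strings"
)

// edgeFilters is an assumed, partial list of the topic filters the
// edgecore EventBus subscribes to; check the source of your KubeEdge version.
var edgeFilters = []string{
	"$hw/events/device/+/state/update",
	"$hw/events/device/+/twin/+",
}

// matches reports whether an MQTT topic matches a topic filter.
// '+' matches exactly one level; '#' as the last level matches the
// remaining levels, including none ("a/#" matches "a").
// The MQTT rule that filters starting with a wildcard do not match
// topics beginning with '$' is not implemented here.
func matches(filter, topic string) bool {
	f := strings.Split(filter, "/")
	t := strings.Split(topic, "/")
	for i, level := range f {
		if level == "#" {
			return i == len(f)-1
		}
		if i >= len(t) {
			return false
		}
		if level != "+" && level != t[i] {
			return false
		}
	}
	return len(f) == len(t)
}

func main() {
	topic := "$hw/events/device/counter/twin/update"
	for _, f := range edgeFilters {
		fmt.Printf("%-35s matches %s: %v\n", f, topic, matches(f, topic))
	}
}
```

Only the `twin/+` filter matches `$hw/events/device/counter/twin/update`. Because the device name sits in a `+` level, any device defined in the CRD files is covered without edgecore knowing its name in advance.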